Logistic regression is a particular case of the generalized linear model, used to model dichotomous outcomes (probit and complementary log-log models are closely related). The name comes from the link function used, the logit or log-odds function. The inverse function of the logit is called the logistic function and is given by:

$$\operatorname{logistic}(x) = \frac{1}{1 + e^{-x}}$$

This function takes a value in ]-Inf, +Inf[ and returns a value between 0 and 1; that is, the logistic function takes a linear predictor and returns a probability.

Logistic regression can be performed using the glm function with the option family = binomial (a shortcut for family = binomial(link = "logit"), the logit being the default link function for the binomial family).

In this example, we try to predict the fate of the passengers aboard the RMS Titanic.

(Dispersion parameter for binomial family taken to be 1)
Null deviance: 1686.8 on 1312 degrees of freedom
Residual deviance: 1165.7 on 1308 degrees of freedom

The summary output begins with the call, which is a reminder of the model and the options specified. Next we see the deviance residuals, which are a measure of model fit. This part of the output shows the distribution of the deviance residuals for the individual cases used in the model.

TODO: Add comparison between interaction and non-interaction models.
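As a small illustration of the logit and logistic functions and of the family = binomial shortcut, here is a minimal sketch in R. The helper names `logistic` and `logit` are hypothetical, and the built-in mtcars data is used only as a stand-in, because the Titanic data itself is not included in this text.

```r
# Hypothetical helpers: the logistic function and its inverse, the logit
logistic <- function(x) 1 / (1 + exp(-x))
logit <- function(p) log(p / (1 - p))

# The logistic function maps any real number (or +/-Inf) into [0, 1]
x <- c(-Inf, -2, 0, 2, Inf)
p <- logistic(x)
print(p)

# Base R ships the same functions as plogis() and qlogis()
print(all.equal(p, plogis(x)))
print(all.equal(logit(p[2:4]), qlogis(p[2:4])))

# family = binomial is a shortcut for family = binomial(link = "logit")
# (mtcars is a stand-in data set, not the Titanic data)
fit_short <- glm(vs ~ mpg, data = mtcars, family = binomial)
fit_long <- glm(vs ~ mpg, data = mtcars, family = binomial(link = "logit"))
print(all.equal(coef(fit_short), coef(fit_long)))

# Fitted probabilities are the logistic function of the linear predictor
eta <- predict(fit_short, type = "link")
prob <- predict(fit_short, type = "response")
print(all.equal(logistic(eta), prob))
```

Each `all.equal` call should print TRUE, which is one way to check that the hand-written functions and the two spellings of the family argument agree.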
#> Number of Fisher Scoring iterations: 7 #> Residual deviance: 19.125 on 28 degrees of freedom

#> glm(formula = vs ~ mpg + am + mpg:am, family = binomial, data = dat) #> Call: glm(formula = vs ~ mpg + am + mpg:am, family = binomial, data = dat) # Do the logistic regression - both of these have the same effect. This case proceeds as above, but with a slight change: instead of the formula being vs ~ mpg + am, it is vs ~ mpg * am, which is equivalent to vs ~ mpg + am + mpg:am. It is possible to specify only a subset of the possible interactions, such as a + b + c + a:c. The interactions can be specified individually, as with a + b + c + a:b + b:c + a:b:c, or they can be expanded automatically, with a * b * c. It is possible to test for interactions when there are multiple predictors. #> Residual deviance: 20.646 on 29 degrees of freedom #> glm(formula = vs ~ mpg + am, family = binomial, data = dat) #> Call: glm(formula = vs ~ mpg + am, family = binomial, data = dat) Because there are only 4 locations for the points to go, it will help to jitter the points so they do not all get overplotted. The data and logistic regression model can be plotted with ggplot2 or base graphics, although the plots are probably less informative than those with a continuous variable. #> Number of Fisher Scoring iterations: 4 #> Residual deviance: 42.953 on 30 degrees of freedom #> glm(formula = vs ~ am, family = binomial, data = dat) #> Call: glm(formula = vs ~ am, family = binomial, data = dat) The data and logistic regression model can be plotted with ggplot2 or base graphics: #> Number of Fisher Scoring iterations: 6 #> Residual deviance: 25.533 on 30 degrees of freedom

#> Null deviance: 43.860 on 31 degrees of freedom #> (Dispersion parameter for binomial family taken to be 1)

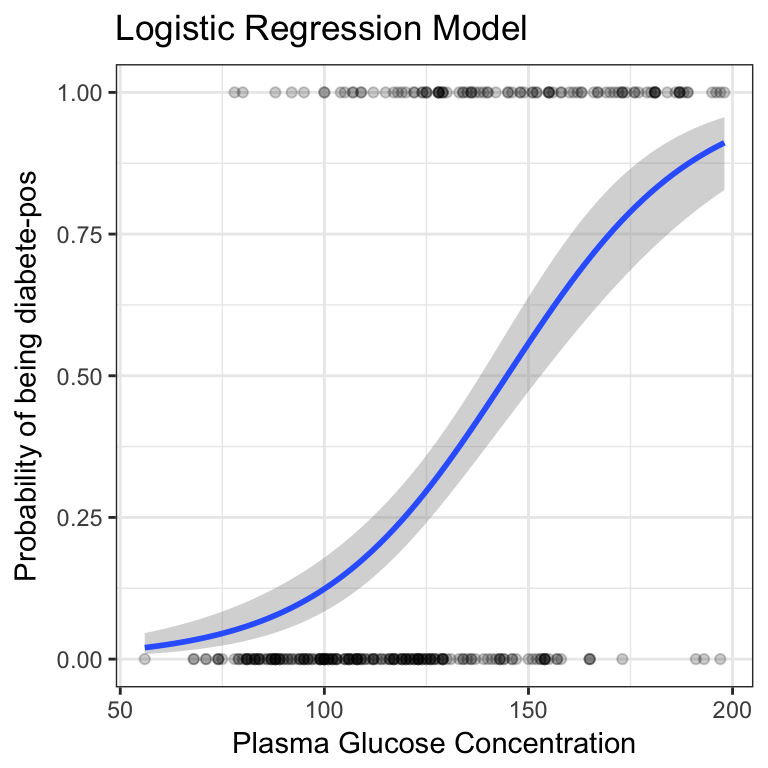

#> glm(formula = vs ~ mpg, family = binomial(link = "logit"), data = dat) #> Call: glm(formula = vs ~ mpg, family = binomial(link = "logit"), data = dat) In this example, mpg is the continuous predictor variable, and vs is the dichotomous outcome variable. If the data set has one dichotomous and one continuous variable, and the continuous variable is a predictor of the probability the dichotomous variable, then a logistic regression might be appropriate. Continuous predictor, dichotomous outcome
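The models whose output is quoted here can be refit with a short script. This is only a sketch: it assumes that `dat` is the mpg, am and vs columns of the built-in mtcars data, which the text does not state explicitly, and the object names (`fit_mpg`, `fit_add`, and so on) are hypothetical.

```r
# Assumption: dat is a subset of the built-in mtcars data
dat <- subset(mtcars, select = c(mpg, am, vs))

# One model per case discussed in the text
fit_mpg <- glm(vs ~ mpg, data = dat, family = binomial)       # continuous predictor
fit_am  <- glm(vs ~ am, data = dat, family = binomial)        # dichotomous predictor
fit_add <- glm(vs ~ mpg + am, data = dat, family = binomial)  # both, no interaction

# Both of these formulas specify the same interaction model
fit_int  <- glm(vs ~ mpg * am, data = dat, family = binomial)
fit_int2 <- glm(vs ~ mpg + am + mpg:am, data = dat, family = binomial)
print(all.equal(coef(fit_int), coef(fit_int2)))

# a * b * c expands to three main effects plus four interaction terms
expanded <- attr(terms(y ~ a * b * c), "term.labels")
print(expanded)

# Residual deviance and residual degrees of freedom for each model
fits <- list(mpg = fit_mpg, am = fit_am, additive = fit_add, interaction = fit_int)
print(sapply(fits, function(f) c(deviance = deviance(f), df = df.residual(f))))

# Predicted probability that vs == 1 at a few illustrative mpg values
new_mpg <- data.frame(mpg = c(15, 20, 25))
p_hat <- predict(fit_mpg, newdata = new_mpg, type = "response")
print(p_hat)

# Likelihood-ratio (chi-squared) test: does the mpg:am interaction improve the fit?
print(anova(fit_add, fit_int, test = "Chisq"))
```

If `dat` is indeed this subset, the residual deviances printed by the script should line up with the values in the quoted output. The `anova` call is one common way to compare the interaction and non-interaction models; it is offered as an illustration, not as the original author's method.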
